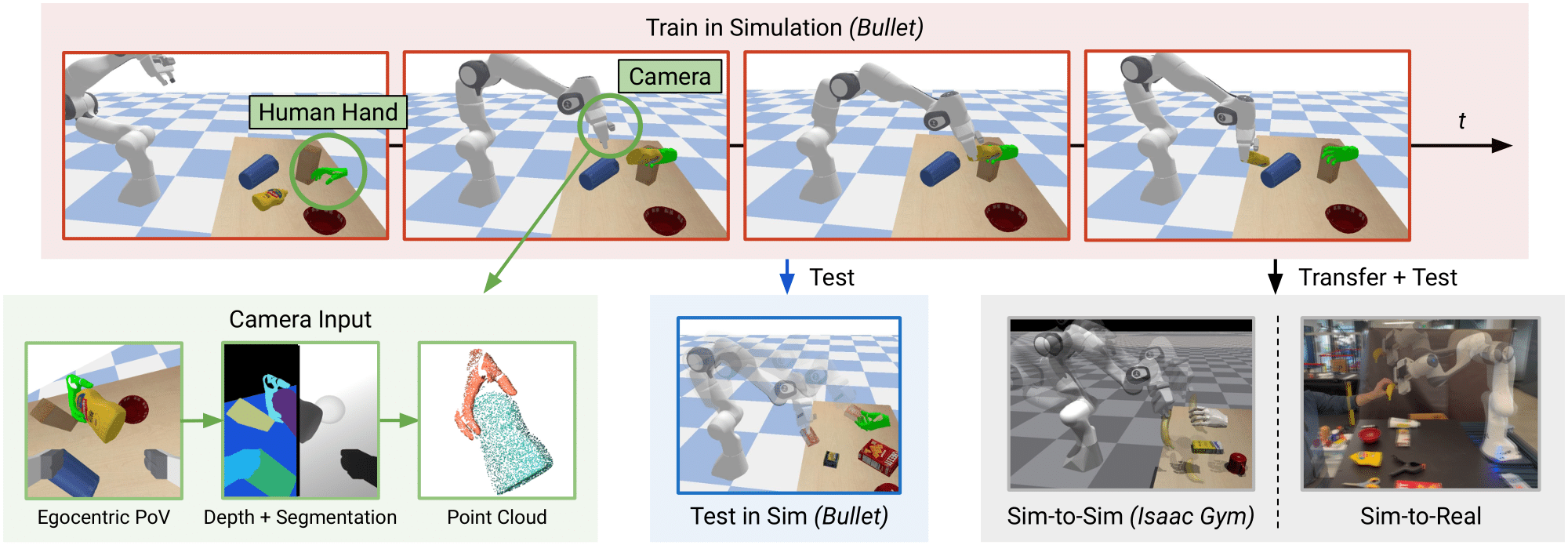

We propose the first framework to learn control policies for vision-based human-to-robot handovers, a critical task for human-robot interaction. While research in Embodied AI has made significant progress in training robot agents in simulated environments, interacting with humans remains challenging due to the difficulties of simulating humans. Fortunately, recent research has developed realistic simulated environments for human-to-robot handovers. Leveraging this result, we introduce a method that is trained with a human-in-the-loop via a two-stage teacher-student framework that uses motion and grasp planning, reinforcement learning, and self-supervision. We show a significant performance gain over baselines on a simulation benchmark, sim-to-sim transfer, and sim-to-real transfer.

Example of user 1 handing over several objects to the robot.

Example of user 2 handing over several objects to the robot.

While our method retains a 90% success rate in the user study, it is not without failures. Here we provide a compilation of failure cases from the user study.

@inproceedings{christen2023handoversim2real,
  title = {Learning Human-to-Robot Handovers from Point Clouds},
  author = {Christen, Sammy and Yang, Wei and Pérez-D'Arpino, Claudia and Hilliges, Otmar and Fox, Dieter and Chao, Yu-Wei},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2023}
}